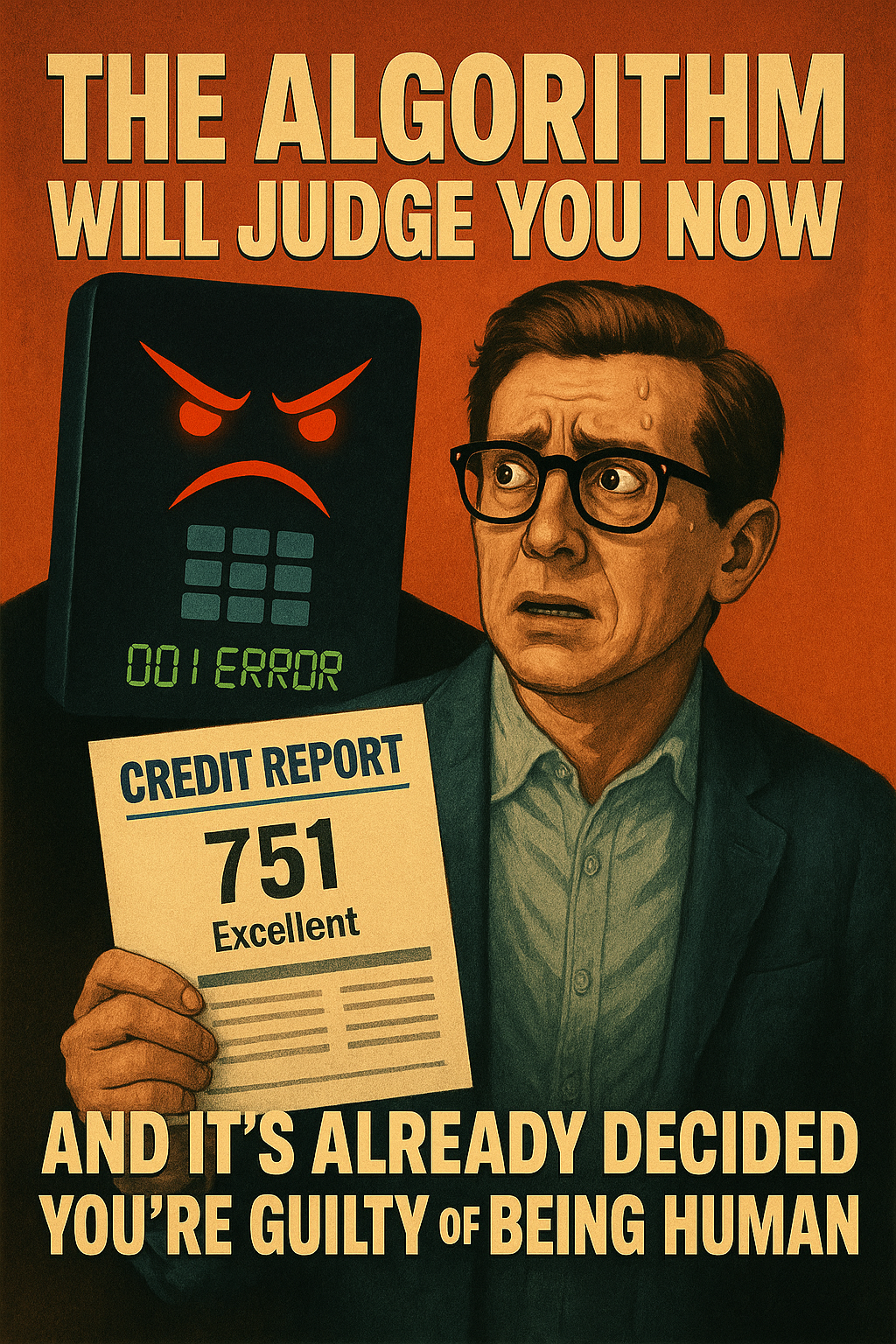

The Algorithm Will Judge You Now

Your credit score dropped three points last week.

Not because you missed a payment or maxed out a card, but because you bought groceries at a different store than usual.

The algorithm noticed.

The algorithm remembered.

The algorithm judged you for having the audacity to shop somewhere new, and now you’re paying for it.

This isn’t the Jetsons. This is a distorted utopia where every click, swipe, and purchase is evidence in a trial you didn’t know you were having, judged by systems you can’t see, using criteria you can’t understand, with no right to appeal.

We’ve handed over the fundamental act of judgment, the thing that makes us human, to mathematical systems that don’t give a shit about context, circumstances, or the fact that sometimes people change their minds, make mistakes, or just want to try a different brand of cereal.

The algorithm will judge you now. And it’s a hanging judge with perfect memory and zero empathy.

The Invisible Courtroom

Every interaction you have with digital systems is simultaneously building a case against you.

Your phone knows you were at a bar until 2 AM on a Tuesday.

Your credit card knows you’ve been buying more coffee than usual.

Your fitness tracker knows you’ve been skipping workouts.

Your search history knows exactly what you were worried about at 3 AM last Thursday.

None of these data points mean anything in isolation. But fed into an algorithmic system designed to predict risk, they paint a picture of someone who’s potentially unreliable, possibly stressed, maybe making poor decisions.

The algorithm doesn’t know you were at that bar because your friend needed someone to talk to after her divorce.

It doesn’t know you’re buying more coffee because you’re working extra hours to pay for your kid’s medical bills.

It doesn’t know you’ve been skipping workouts because you threw out your back moving furniture.

The algorithm just knows you’re deviating from baseline. Deviation equals risk. Risk equals punishment.
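To make "deviation equals risk" concrete, here is a minimal sketch of how a baseline check could work. It is an illustration, not any real scoring system: the function name, the weekly coffee counts, and the threshold of 2.0 are all assumptions made up for this example.

```python
from statistics import mean, stdev

def deviation_score(history, latest):
    """How many standard deviations `latest` sits from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is "infinitely" unusual.
        return 0.0 if latest == mu else float("inf")
    return abs(latest - mu) / sigma

# Hypothetical data: coffee purchases per week over the last eight weeks.
coffee_history = [4, 5, 4, 5, 4, 5, 4, 5]
FLAG_THRESHOLD = 2.0  # assumed cutoff, chosen only for illustration

score = deviation_score(coffee_history, 9)  # a week of extra shifts
flagged = score > FLAG_THRESHOLD
print(f"deviation={score:.2f}, flagged={flagged}")
```

Notice what the function takes as input: numbers. There is no parameter for the medical bills, the divorce, or the thrown-out back.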

The New Credit Score Economy

Your credit score used to be about how well you paid your debts.

Now it’s about how predictably you live your life.

Insurance companies are buying data about how often you shop for groceries, where you buy them, and what time of day you go shopping.

They’re using this information to decide how much you should pay for car insurance.

Because apparently, someone who grocery shops at midnight is a higher-risk driver than someone who shops at 2 PM on Sundays.

The algorithm has determined that your shopping schedule is correlated with your likelihood of filing an insurance claim, and that’s all the justification it needs to charge you more.

This isn’t about financial responsibility anymore. It’s about lifestyle conformity.

The algorithm rewards predictability and punishes deviation, regardless of whether that deviation has anything to do with the thing you’re being judged for.

Your landlord is using algorithms to screen potential tenants based on social media activity.

Your employer is using algorithms to decide who gets promoted based on email response times and calendar scheduling patterns.

Your bank is using algorithms to decide your loan eligibility based on where you shop and what you buy.

We’ve created a system where being human, that is, inconsistent, emotional, and unpredictable, is treated as evidence of moral failure.

The Automation of Prejudice

Here’s the really insidious part: we’ve automated bias and called it objectivity.

These algorithms aren’t neutral. They encode the prejudices of their creators and amplify them at scale.

An AI hiring system trained on past hiring data will perpetuate past hiring discrimination. An AI lending system trained on historical loan approvals will perpetuate historical lending discrimination. An AI criminal justice system trained on past arrest records will perpetuate past policing discrimination.

But because it’s math, we pretend it’s fair. Because it’s automated, we pretend it’s objective. Because it’s efficient, we pretend it’s just.

The algorithm doesn’t care that historical data is full of human bias. It just sees patterns and perpetuates them.

It doesn’t judge you based on who you are; it judges you based on who people like you have been, according to data that was biased to begin with.

Your zip code becomes a proxy for your character.

Your shopping history becomes a proxy for your reliability.

Your social network becomes a proxy for your trustworthiness.

The algorithm reduces you to data points and then judges those data points based on patterns it learned from other people’s biased decisions.
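A toy version of that mechanism, assuming a deliberately crude "model" that only memorizes historical hire rates per zip code. The zip codes, the counts, and the 0.5 cutoff are invented for the sketch; real systems are more elaborate, but the way the bias gets inherited is the same.

```python
from collections import defaultdict

def train_hire_rates(history):
    """Learn the historical hire rate for each zip code."""
    counts = defaultdict(lambda: [0, 0])  # zip -> [hired, total]
    for zip_code, hired in history:
        counts[zip_code][0] += int(hired)
        counts[zip_code][1] += 1
    return {z: hired / total for z, (hired, total) in counts.items()}

def predict_hire(rates, zip_code, cutoff=0.5):
    """Recommend a candidate only if people from their zip were usually hired."""
    return rates.get(zip_code, 0.0) >= cutoff

# Hypothetical past decisions made by biased humans.
past_decisions = (
    [("10001", True)] * 9 + [("10001", False)] * 1
    + [("10002", True)] * 2 + [("10002", False)] * 8
)

rates = train_hire_rates(past_decisions)

# Two equally qualified candidates; qualifications never enter the model.
print(predict_hire(rates, "10001"))  # True
print(predict_hire(rates, "10002"))  # False
```

Nobody wrote a rule saying "reject zip 10002." The model just found the pattern that was already in the data and kept it going.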

The Feedback Loop of Digital Judgment

Even worse, these algorithmic judgments become self-reinforcing.

Get flagged as high-risk by one system, and that judgment follows you to other systems.

Get denied for a loan because of your algorithmic score, and that denial becomes part of your algorithmic score for the next loan application.

Can’t get a job because the algorithm flagged your resume?

Now you have a gap in employment that makes the algorithm even more suspicious next time.

Can’t get insurance at a reasonable rate because the algorithm thinks you’re risky?

Now you have to go with a subprime insurer, which makes other algorithms think you’re even riskier.
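The loop is easy to simulate. The sketch below assumes a made-up scoring rule in which every denial knocks a fixed number of points off the score used for the next application; the cutoff, penalty, and starting scores are hypothetical and not drawn from any actual credit model.

```python
def simulate_applications(start_score, approval_cutoff, denial_penalty, applications):
    """Return a list of (score_at_application, approved) for each attempt."""
    score = start_score
    history = []
    for _ in range(applications):
        approved = score >= approval_cutoff
        history.append((score, approved))
        if not approved:
            # The denial itself becomes an input to the next decision.
            score -= denial_penalty
    return history

# Two hypothetical applicants, five points apart.
just_below = simulate_applications(645, approval_cutoff=650, denial_penalty=10, applications=4)
just_above = simulate_applications(650, approval_cutoff=650, denial_penalty=10, applications=4)

print(just_below)  # [(645, False), (635, False), (625, False), (615, False)]
print(just_above)  # [(650, True), (650, True), (650, True), (650, True)]
```

A five-point gap at the start becomes a thirty-five-point gap by the fourth application, and nothing about either person changed in between.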

It’s digital redlining with perfect memory and no statute of limitations. One algorithmic judgment can cascade through your entire digital life, affecting everything from your ability to get credit to your chances of getting hired to the prices you pay for basic services.

And unlike human prejudice, which is at least visible and can be confronted, algorithmic bias is hidden behind proprietary code and trade secrets. You can’t argue with the algorithm. You can’t explain your circumstances to the algorithm. You can’t ask the algorithm to reconsider.

The algorithm has judged you, and the algorithm’s judgment is final.

The Illusion of Personalization

Tech companies love to talk about personalization: how algorithms create customized experiences tailored just for you.

What they don’t mention is that personalization is just judgment in disguise.

Your “personalized” feed isn’t tailored to you; it’s tailored to people like you. You’re not being seen. You’re being sorted.

Your “customized” shopping recommendations aren’t based on your individual preferences. They’re based on what the algorithm thinks people in your demographic cluster want to buy.

The algorithm isn’t personalizing your experience. It’s categorizing you and then treating you like everyone else in your category.

It’s the opposite of personalization. It’s algorithmic stereotyping.

You think you’re getting a unique, tailored experience, but you’re actually getting fed into the same behavioral modification machine as everyone else the algorithm has decided you’re similar to.

The algorithm has judged you to be a certain type of person, and now it’s going to shape your reality to match that judgment.

The Appeal to a Higher Power

The cruelest part of algorithmic judgment is the illusion of scientific objectivity. When a human makes a biased decision, we can argue with them, appeal to their better nature, present new evidence, or escalate to their supervisor.

When an algorithm makes a biased decision, we’re told it’s just math.

We’re told the computer doesn’t lie.

We’re told the data doesn’t have feelings or prejudices.

We’re told to trust the process.

But algorithms aren’t neutral. They’re made by humans, trained on human-generated data, and designed to optimize for human-defined objectives.

Every algorithm embeds the values, biases, and assumptions of its creators. The difference is that we’ve made those biases invisible and unquestionable.

The algorithm becomes the perfect authority figure: all-knowing, impartial, and beyond appeal.

It’s the technological equivalent of “because God said so,” except God is a bunch of software engineers in Silicon Valley who never had to live under their own systems.

The Quantified Verdict

We’ve reduced human judgment to pattern matching and called it progress.

We’ve automated discrimination and called it efficiency. We’ve created systems that punish deviation from statistical norms and called it risk management.

The algorithm doesn’t judge you based on your actions; it judges you based on its predictions about your actions. It doesn’t evaluate your character; it evaluates your correlation with other people’s data. It doesn’t assess your individual circumstances; it assesses your similarity to statistical clusters.

You’re not being judged by what you’ve done. You’re being judged by what the algorithm thinks people like you might do. You’re guilty until proven innocent, and the proof the algorithm accepts is conformity to its predictive models.

The Resistance

The solution isn’t to make algorithms fairer. It’s to question whether algorithmic judgment should exist at all.

Some decisions are too important to automate. Some judgments require human empathy, understanding, and the ability to consider context that doesn’t show up in data.

We need right-to-explanation laws that require companies to tell you why their algorithms made decisions about you. We need algorithmic auditing requirements that force companies to test their systems for bias. We need human appeal processes that allow you to contest algorithmic decisions.
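An audit doesn’t have to be exotic. One common starting point is to compare approval rates across groups and flag large gaps, in the spirit of the “four-fifths” rule of thumb from US employment guidelines. The sketch below is a minimal illustration with invented groups and decisions; a real audit would need far more than one ratio.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + (1 if approved else 0)
    return {g: approvals[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap between groups
    return min(rates.values()) / highest

# Hypothetical decisions produced by some automated system.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

ratio = disparate_impact_ratio(decisions)
FOUR_FIFTHS = 0.8  # conventional rule-of-thumb threshold
print(f"ratio={ratio:.2f}, needs_review={ratio < FOUR_FIFTHS}")
```

Passing a check like this doesn’t prove a system is fair. Failing it is a strong hint that someone should have to explain why.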

Most importantly, we need to stop pretending that automation equals objectivity.

Math isn’t neutral when it’s applied to human lives. Algorithms aren’t fair when they’re trained on biased data. Efficiency isn’t justice when it perpetuates discrimination at scale.

The algorithm is judging you right now, scanning your digital footprints, updating your risk scores, adjusting your prices, filtering your opportunities.

It’s making decisions about your life based on patterns it found in other people’s data, using criteria you can’t see, with confidence intervals you’re not allowed to question.

The question isn’t whether the algorithm’s judgment is accurate.

The question is whether we want to live in a world where being human is treated as a bug to be optimized away by systems that will never understand what it means to be human.

The algorithm will judge you now. The only question left is whether you’ll judge it back.

Want more unfiltered takes on how technology is quietly reshaping human judgment? Follow [Futuredamned] for analysis that doesn’t ask permission to question the systems judging all of us.